Large language models (LLMs), colloquially called artificial intelligence by companies that sell rebranded autocomplete tools to other companies and to the public, can automate many entry-level tasks, but give them something more complex and their defects show up immediately.

The paper argues that when Black social media users show signs of depression in their writing, LLM/AI tools do not detect it as readily as they do for people of other races.

Before you start your social justice rampage, consider the source: PNAS, a journal that has published papers claiming that herbicides magically turn frogs “gay” and that hurricanes with female names are more dangerous because of patriarchy. This study rests on 800 Facebook posts, while high-quality LLMs are built on far larger datasets. Before anyone plays the race card, we should be skeptical of it, just as we should be skeptical of the Biden administration’s claim that mandating and subsidizing electric vehicles could save $100 billion in health care costs.

Let’s get started. Claims like this pop up all over the place. Microsoft was once called racist because its Xbox Kinect didn’t detect Black people as well as white people. Social justice advocates assumed that if Microsoft had employed more people of color, the problem would have been solved. In fact, the Kinect also did a poor job of detecting white and Latino people in low light.

More testing does not guarantee perfection, either. If you test a drug on 1,000 people and it’s safe, testing it on 2,000 people won’t necessarily improve anything, no matter what the government says about FDA approval. No amount of testing can eliminate all risk. Yet if a drug approved anywhere from 2001 to 2005 later harms someone, a trial lawyer will pull out the knives and pick a San Francisco jury, the kind of people who believe plants are little people (and therefore that herbicides cause cancer in humans). Only someone like that would think “further testing required” leads to perfection.

Even if white people create the LLM or the data it is trained on, there really isn’t much of a way to embed racism in it; LLMs don’t work that way. Take a graphical “AI”: I used it on my Halloween photos, and it created what looks like a wizard at a Renaissance fair.

It doesn’t look much like a close-up of me transformed into a wizard. But was it racism that changed my skin color so dramatically? If the skin color had been reversed, anyone looking to cash in on social currency or an actual culture war would certainly argue that it was.

The most likely reason is that I’m clearly not very good at this. The example also shows a statistical error that almost all epidemiologists make: they feel they have converged on the best answer and declare statistical significance. But if I ask all of you to rate me on a scale of 9 to 10, I can declare with statistical significance, and with the Internet as my authority, that I am rated 9.01.(1)
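To make the rating example concrete, here is a minimal Python sketch using simulated, hypothetical ratings (nothing here comes from a real survey, and the 10,000-respondent sample size is an assumption). When respondents may only answer 9 or 10, a large enough sample yields a narrow, “statistically significant” confidence interval that says nothing about whether the question was fair.

```python
import math
import random


def mean_and_ci95(ratings):
    """Sample mean with a normal-approximation 95% confidence interval."""
    n = len(ratings)
    mean = sum(ratings) / n
    # Sample variance with Bessel's correction (n - 1).
    var = sum((r - mean) ** 2 for r in ratings) / (n - 1)
    half_width = 1.96 * math.sqrt(var / n)
    return mean, (mean - half_width, mean + half_width)


# Hypothetical poll: respondents may only answer 9 or 10, so the result
# is boxed into [9, 10] before anyone answers.
random.seed(42)
ratings = [random.choice([9, 10]) for _ in range(10_000)]

mean, (lo, hi) = mean_and_ci95(ratings)
print(f"mean = {mean:.2f}, 95% CI = ({lo:.3f}, {hi:.3f})")
# The interval is narrow and far above 5, the midpoint of a 1-10 scale,
# so it looks "significant", but only because of how the question was asked.
```

The narrowness of the interval reflects only the sample size; the answer range was fixed before a single rating came in.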

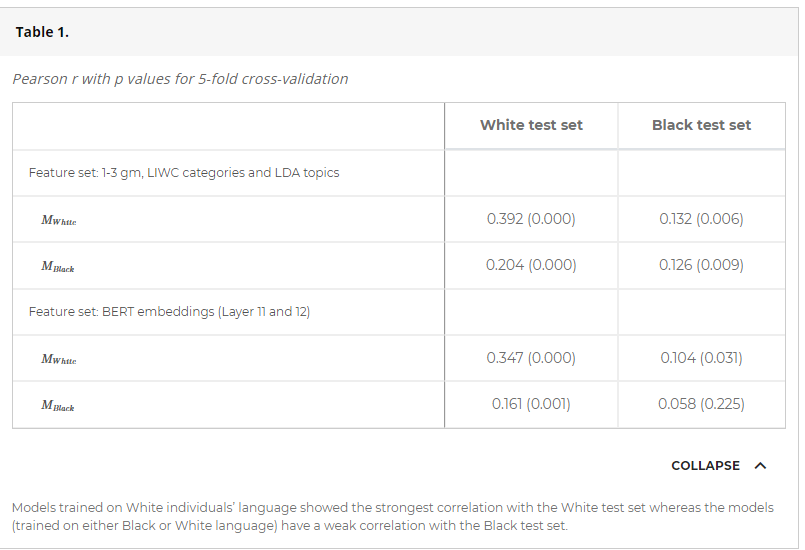

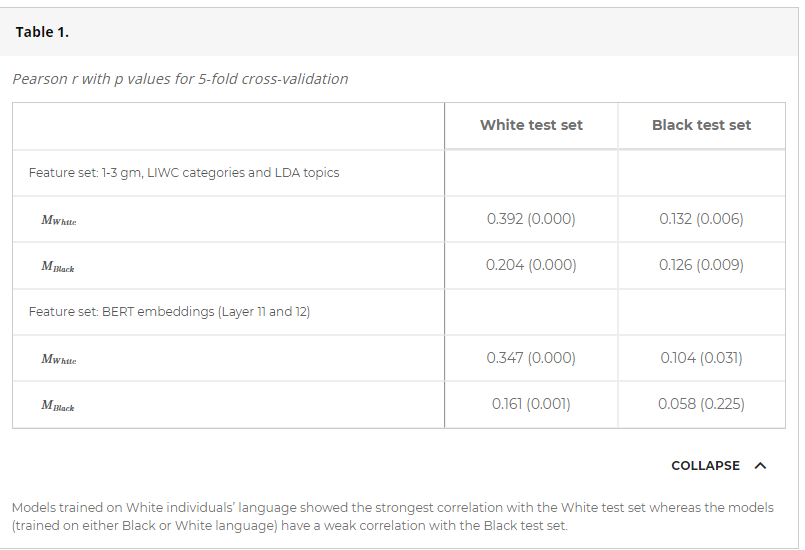

AI may not “sense” mental health issues in people of color because the signal words white people use may differ from those used in Black and Latinx communities. Knowing that, the authors tried adjusting the model to use terms more likely to be used by Black people, but it still didn’t work: for depression, the model’s predictive power was three times lower for Black people.

The social justice community may want to mandate that every American company fire large numbers of its current employees and hire to match America’s ethnic makeup, but that is just a recipe for the corporate equivalent of a terrible sports team. What actually works is meritocracy and ability.

Rather, the problem is that too many companies and academics believe the hype about AI’s promise and ignore the reality. From organic food to solar panels, our culture has fallen for hype like this before, and those were expensive lessons to learn.

But what we shouldn’t be teaching is that AI is racist unless it is written to match a country’s changing demographics. A police officer in New York and an anti-science activist in San Francisco have almost nothing in common, but if they both vote Democratic, an AI will converge on an averaged template of the two. That’s why no one should be scared of AI unless they believe what it says.

Note:

(1) Think that doesn’t happen? If donors fund our epidemiology program, we can show that not only does it happen, it is the default at IARC, NIEHS, and the Harvard School of Public Health.